After figuring out the right logic and confirming that it works, I can start pre-processing the audio data. I’ve written a whole dedicated project for processing audio data.

One important thing is to first convert the audio to 22050 Hz if that hasn’t been done already. I’ve written a small bash script that uses sox for this job (doing it with librosa is quite slow, but I’ve also included the code to read 44 kHz audio and output it as 22 kHz):

```bash
mkdir -p converted/"$1"/

for i in "$1"/*.wav; do
    # Mirror the input file name under converted/<folder>/
    o=converted/"$1"/${i#"$1"/}
    # Resample to 22050 Hz and downmix to mono
    sox "$i" -r 22050 -c 1 "$o"
done
```

Here is an explanation of the files, using Geralt as the example:

- Place a folder named after the character, containing all of its audio files, in the root of the project.

- Run split.py; the Geralt_output folder will be created containing the split clips.

- (optional) Select the audio clips that are really small (likely to be a sigh or a "hmm") and move them into a folder named {voice}_test, e.g. Geralt_test.

- (optional) Run transcribe.py; it transcribes the clips and creates {voice}_transcription.csv.

- (optional) Run move.py; it reads the transcription CSV and moves every file that has a transcription from the test folder back to the output folder.

- (optional) Run rename.py; it renames the remaining clips in the test folder so that the sentence is in the filename, for easier manual checking.

- (optional) After checking and deleting, the clips left in the test folder are the ones to keep; run clean.py to restore their normal names and move them back to the output folder.

- Run combine.py to merge the clips and insert a fixed silence between them. A folder {voice}_combined will be created.

split.py

```python
import glob
import os

import librosa
import numpy as np
from scipy.io import wavfile as wav

sr = 22050  # assumed module-level sampling rate, matching the sox conversion


def split_audio_to_list(source, preemph=True, preemphasis=0.8, min_diff=1500,
                        min_size=3000, chunk_size=9000, db=50):
    if preemph:
        # Pre-emphasis filter: y[n] = x[n] - preemphasis * x[n - 1]
        source = np.append(source[0], source[1:] - preemphasis * source[:-1])
    # Non-silent intervals as [[start, end], ...] in samples
    split_list = librosa.effects.split(source, top_db=db).tolist()
    # Walk backwards so pop() doesn't shift the indices still to be visited
    i = len(split_list) - 1
    while i > 0:
        if split_list[i][-1] - split_list[i][0] > min_size:
            now = split_list[i][0]
            prev = split_list[i - 1][1]
            diff = now - prev
            if diff < min_diff:
                # Short pause: merge this clip into the previous one
                split_list[i - 1] = [split_list[i - 1][0], split_list.pop(i)[1]]
        else:
            # Clip shorter than min_size: drop it
            split_list.pop(i)
        i -= 1
    return [x for x in split_list if x[-1] - x[0] > chunk_size]
```

split_audio_to_list is a function that returns a list of [start, end] pairs giving the start and end index of each split clip in the whole audio array. The split function from librosa already returns the intervals in this form: [(start_index1, end_index1), (start_index2, end_index2), …]. After getting the split list, I run a while loop to check whether each element fits certain criteria: first the minimum size of a clip (end_index minus start_index), then the gap to the previous clip (the first element of the current pair minus the last element of the previous pair), so that clips separated only by a short pause aren’t split apart.
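To see what the merging loop does without loading any audio, here is a minimal sketch that applies the same rules to hand-written intervals. The helper name merge_intervals and the toy numbers are made up for illustration; it only mirrors the loop as described above and is not the code from the repo.

```python
def merge_intervals(intervals, min_diff=1500, min_size=3000, chunk_size=9000):
    """Merge or drop [start, end] sample intervals using the rules described above."""
    intervals = [list(x) for x in intervals]  # work on a copy
    i = len(intervals) - 1
    while i > 0:
        start, end = intervals[i]
        if end - start > min_size:
            gap = start - intervals[i - 1][1]
            if gap < min_diff:
                # Short pause: absorb this clip into the previous one.
                intervals[i - 1] = [intervals[i - 1][0], intervals.pop(i)[1]]
        else:
            # Too short to keep on its own.
            intervals.pop(i)
        i -= 1
    # Finally keep only the clips longer than chunk_size.
    return [x for x in intervals if x[1] - x[0] > chunk_size]


# Hypothetical intervals: a 500-sample pause, a 1000-sample blip, a long clip.
toy = [[0, 10000], [10500, 20000], [40000, 41000], [60000, 75000]]
print(merge_intervals(toy))  # [[0, 20000], [60000, 75000]]
```

The first two intervals are joined because only 500 samples separate them, and the 1000-sample blip is dropped because it is shorter than min_size.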

```python
def trim_custom(audio, begin_db=25, end_db=40):
    # librosa.effects.trim returns (trimmed_audio, [start, end])
    begin = librosa.effects.trim(audio, top_db=begin_db)[1][0]
    end = librosa.effects.trim(audio, top_db=end_db)[1][1]
    return audio[begin:end]
```

This is a custom trim that uses different thresholds for the beginning and the end of a clip.
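To get a feel for the two thresholds, the sketch below converts "decibels below the loudest part" into an amplitude ratio. It assumes the usual amplitude convention of 20·log10, which is how I understand librosa’s top_db to work relative to the peak; the helper name is made up for illustration.

```python
def db_below_peak_to_ratio(top_db):
    """Amplitude ratio that corresponds to `top_db` decibels below the reference."""
    return 10 ** (-top_db / 20)


begin_ratio = db_below_peak_to_ratio(25)  # threshold used for the start of a clip
end_ratio = db_below_peak_to_ratio(40)    # threshold used for the end of a clip
print(f"begin: {begin_ratio:.4f} of peak")  # begin: 0.0562 of peak
print(f"end:   {end_ratio:.4f} of peak")    # end:   0.0100 of peak
```

Under that assumption, the start of a clip is cut more aggressively (anything quieter than roughly 5.6% of the peak counts as silence), while the end keeps the quieter tail of a word down to about 1% of the peak.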

```python
def split(audio_folder):
    audio_list = glob.glob(f'{audio_folder}/*.wav')
    if not os.path.exists(f'{audio_folder}_output'):
        os.mkdir(f'{audio_folder}_output')
    # if not os.path.exists('output_16k'):
    #     os.mkdir('output_16k')
    for audio_file in audio_list:
        filename = os.path.splitext(os.path.basename(audio_file))[0]
        # Load as float32 at the project sampling rate
        audio_array = librosa.load(audio_file, sr=sr)[0]
        audio_split = split_audio_to_list(audio_array)
        for i, part in enumerate(audio_split):
            part_array = trim_custom(audio_array[slice(*part)])
            # part_array_16k = librosa.resample(part_array, sr, 16000)
            # Scale to the int16 range before casting
            part_array *= 32767
            # part_array_16k *= 32767
            wav.write(f'{audio_folder}_output/{filename}_{i}.wav', sr, part_array.astype(np.int16))
            # wav.write(f'{audio_folder}_output_16k/{filename}_{i}.wav', 16000, part_array_16k.astype(np.int16))
```

This is the main code. Nothing special: it just loops through the audio files and applies the functions above. As the data is loaded as np.float32, it has to be multiplied by 32767 and cast back to np.int16 so that SciPy writes the audio as 16-bit PCM, which is the original input format.
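The scaling step can be tried on a few numbers in plain Python. This is only a sketch: the helper name is made up, and the clipping is an extra safeguard that is not part of split.py (samples loaded by librosa normally stay within [-1, 1] already).

```python
def float_to_int16(samples):
    """Scale floats in [-1.0, 1.0] to 16-bit PCM integers, clipping overshoot."""
    out = []
    for s in samples:
        s = max(-1.0, min(1.0, s))  # clip so the value fits into int16
        out.append(int(s * 32767))  # int() truncates toward zero
    return out


print(float_to_int16([0.0, 0.5, -0.5, 1.0, -1.0, 1.2]))
# [0, 16383, -16383, 32767, -32767, 32767]
```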

transcribe.py

This was originally used for transcribing the split audio clips, but since I realized that I could just glue the clips back together given that the audio has a normalized silence duration, I now use it to figure out whether a clip has words or not. As before, silence has to be added before the beginning of each clip for it to be recognized correctly. I’ve also included a convert_16k.sh in the project, since DeepSpeech is trained on 16 kHz audio and gives better results if the input audio is 16 kHz.

To run this, you need to create a folder {voice}_test containing the audio files you would like to check. You just need to sort the output folder by file size and move the smaller files into the test folder; in this case, it’s Geralt_test.
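Sorting by size and moving the small files can also be scripted instead of done by hand in a file manager. The sketch below is not part of the project: the function name and the size threshold are placeholders for illustration.

```python
import os
import shutil


def move_small_clips(output_dir, test_dir, max_bytes):
    """Move .wav files smaller than `max_bytes` from output_dir into test_dir."""
    os.makedirs(test_dir, exist_ok=True)
    moved = []
    for name in sorted(os.listdir(output_dir)):
        path = os.path.join(output_dir, name)
        if name.endswith('.wav') and os.path.getsize(path) < max_bytes:
            shutil.move(path, os.path.join(test_dir, name))
            moved.append(name)
    return moved


# Example call (the 60 kB threshold is an arbitrary placeholder):
# move_small_clips('Geralt_output', 'Geralt_test', max_bytes=60_000)
```

A good threshold depends on the sampling rate and on how short the sighs and hmms of a given character tend to be, so it is worth checking a few files by ear first.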

22kHz:

16kHz:(better)

To add the silence, I only need to pad with zeros; how many zeros to add depends on the sampling rate. Here, silence seconds are padded at the beginning and 0.5 s at the end:

```python
np.pad(audio, (int(sr * silence), int(sr * 0.5)), 'constant')
```

To transcribe:

```python
from deepspeech import Model

ds = Model('deepspeech-0.7.0-models.pbmm')
transcription = ds.stt(audio)
```

The detailed code can be found in the GitHub repo. After running the script, a file named {voice}_transcription.csv is generated.
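Going back to the padding step, the number of zeros is just the sampling rate times the duration in seconds. Here is a plain-Python sketch of the same idea without NumPy; the function name and its default values are made up for illustration.

```python
def pad_with_silence(samples, sr, lead_seconds, tail_seconds=0.5):
    """Return the samples with zero-valued silence added before and after."""
    lead = [0] * int(sr * lead_seconds)
    tail = [0] * int(sr * tail_seconds)
    return lead + list(samples) + tail


clip = [3, -2, 5]
padded = pad_with_silence(clip, sr=16000, lead_seconds=0.5)
print(len(padded))  # 16003: 8000 zeros + 3 samples + 8000 zeros
```

The same half second is 8000 zeros at 16 kHz but 11025 zeros at 22050 Hz, which is why the padding has to be computed from the sampling rate.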

move.py

This file is the continuation of the transcription step. Since I don’t want to do file operations in transcribe.py, I’ve separated the moving part into its own file named move.py. Basically, it moves the files listed in the transcription CSV whose transcription is not empty (so they contain voice/content), and only the audio files with an empty transcription remain in the test folder.

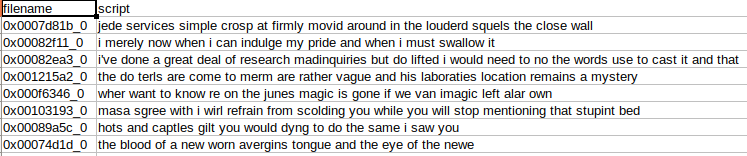

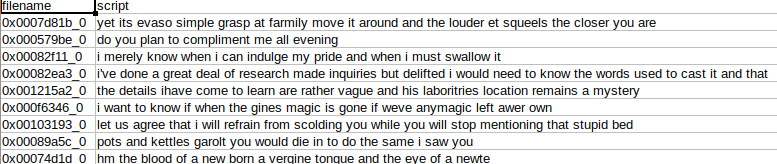
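The idea behind move.py can be sketched as follows. This is not the code from the repo: the column names file and transcription are assumptions made for illustration, and the real CSV layout may differ.

```python
import csv
import os
import shutil


def move_transcribed(csv_path, test_dir, output_dir):
    """Move clips with a non-empty transcription from test_dir to output_dir."""
    moved = []
    with open(csv_path, newline='', encoding='utf-8') as f:
        # Column names 'file' and 'transcription' are assumed for illustration.
        for row in csv.DictReader(f):
            text = (row.get('transcription') or '').strip()
            src = os.path.join(test_dir, row['file'])
            if text and os.path.exists(src):
                shutil.move(src, os.path.join(output_dir, row['file']))
                moved.append(row['file'])
    return moved
```

Clips whose transcription is empty are left untouched, so what remains in the test folder is exactly the set that still needs a manual check.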

rename.py

So what do we do with the remaining files in the test folder? Do we delete them? Actually no. It is better to check them one by one because, as it turns out, some of them are non-verbal sounds that are still needed, such as clips like "hmm" and "ah". To make checking more efficient, the files in the test folder are renamed so that the original transcription from the game is in the filename. The original full audio file is moved here as well so that it can be compared easily.
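The renaming needs to be reversible, so the sentence has to be embedded in a way that can be stripped again later. Below is a minimal sketch, assuming the sentence is wrapped in | pipes and appended to the clip name, which is the pattern clean.py later removes. The helper names and the exact position of the sentence are assumptions, not the code from rename.py.

```python
import re


def add_transcription(filename, sentence):
    """Append the sentence, wrapped in pipes, to a clip name."""
    # Replace characters that would break the path or the pipe markers.
    safe = sentence.replace('/', ' ').replace('|', ' ')
    return f'{filename}|{safe}|'


def strip_transcription(filename):
    """Undo add_transcription by removing the |...| part."""
    return re.sub(r'\|.*\|', '', filename)


tagged = add_transcription('q001_3', 'Hmm.')
print(tagged)                       # q001_3|Hmm.|
print(strip_transcription(tagged))  # q001_3
```

Note that | is a valid filename character on Linux but not on Windows, so a different delimiter would be needed there.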

The next step is to place all the files in a playlist in a media player. I use VLC in the example (there are not many choices on Linux anyway).

Then delete any files that really aren’t needed.

clean.py

At this point, all the files left in the test folder should be put back into the output folder. The original full audio files, which carry neither a | pipe nor a _ clip index in their names, are deleted, since they are not split clips. The remaining files are then moved back to the output folder without the transcription in the file name.

```python
import re
import shutil


def clean(voice):
    audio_list = glob.glob(f'{voice}_test/*.wav')
    for path in audio_list:
        filename = os.path.splitext(os.path.basename(path))[0]
        if '_' in filename:
            # Split clip: strip the |transcription| part if present, then move back
            if '|' in path:
                filename = re.sub(r'\|.*\|', '', filename)
            shutil.move(path, f'{voice}_output/{filename}.wav')
        else:
            # No clip index in the name: this is an original full audio file
            os.remove(path)
```

combine.py

Last but not least, we need to combine the audio back together, so I use the intersperse function from more_itertools to insert zeros (silence) between the audio arrays in a list. Finally, I just need to concatenate all the items in the list into a single NumPy array, and it’s ready to be saved as a wav file.
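The interspersing step can be tried on toy data first. This sketch uses plain lists instead of more_itertools and NumPy, and the helper names are made up for illustration; the silence length follows the same sr * 1500 / 10000 formula as combine.py, which works out to 0.15 s.

```python
def silence_length(sr):
    """Number of zero samples inserted between clips (0.15 s)."""
    return int(sr * 1500 / 10000)


def join_with_silence(clips, gap_samples):
    """Concatenate clips with `gap_samples` zeros between consecutive clips."""
    silence = [0] * gap_samples
    combined = []
    for index, clip in enumerate(clips):
        if index > 0:
            combined.extend(silence)  # silence only between clips, not at the ends
        combined.extend(clip)
    return combined


print(silence_length(22050))  # 3307
print(join_with_silence([[1, 2], [3], [4, 5, 6]], gap_samples=2))
# [1, 2, 0, 0, 3, 0, 0, 4, 5, 6]
```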

```python
import csv
import shutil

import pandas as pd
from more_itertools import intersperse


def combine(voice):
    if not os.path.exists(f'{voice}_combined'):
        os.mkdir(f'{voice}_combined')
    audio_list = glob.glob(f'{voice}_output/*.wav')
    audio_df = pd.DataFrame(audio_list, columns=['path'])
    audio_df['filename'] = audio_df['path'].str.split('.', expand=True)[0].apply(lambda x: os.path.split(x)[1])
    # File names look like {name}_{number}
    audio_df['name'], audio_df['number'] = zip(*audio_df['filename'].str.split('_').tolist())
    # Sort clip indices numerically; as strings, '10' would sort before '2'
    audio_df['number'] = audio_df['number'].astype(int)
    audio_df.to_csv(f'{voice}_audio_df.csv', encoding='utf-8', index=False, sep='|', quoting=csv.QUOTE_NONE)
    for name, name_df in audio_df.groupby('name'):
        path_list = name_df.sort_values('number')['path'].to_list()
        if len(path_list) > 1:
            # 0.15 s of silence between consecutive clips
            audio_slice = intersperse(np.zeros(int(sr * 1500 / 10000), dtype=np.int16), [wav.read(path)[1] for path in path_list])
            # name_audio = trim_custom(np.concatenate([*audio_slice], axis=None))
            name_audio = np.concatenate([*audio_slice], axis=None)
            wav.write(f'{voice}_combined/{name}.wav', sr, name_audio.astype(np.int16))
        else:
            # Only one clip: copy it as is
            shutil.copy2(path_list[0], f'{voice}_combined/{name}.wav')
```

Manual checking

For one character (Keira), since she doesn’t have many files, I thought I should manually check the quality and verify the transcription. I decided to listen to every single one of the audio clips.

First, I repeated the whole process described above. And this is the test folder. It turns out the transcription is wrong a few times, which also made me wonder whether I should listen to the 1.2k files for Yennefer and the 20k files for Geralt.

There are also just too many non-vocal parts that cannot be separated, since there is no pause between them and the start of the dialog. So I decided to actually do the ‘rename’ process on the combined audio.

In the end, the audio went from 30+ minutes down to 22 minutes, and I edited more than 10 files.

Her voice:

DCTTS:(using LJ-Speech SSRN)