Let’s take a look at the web interface.

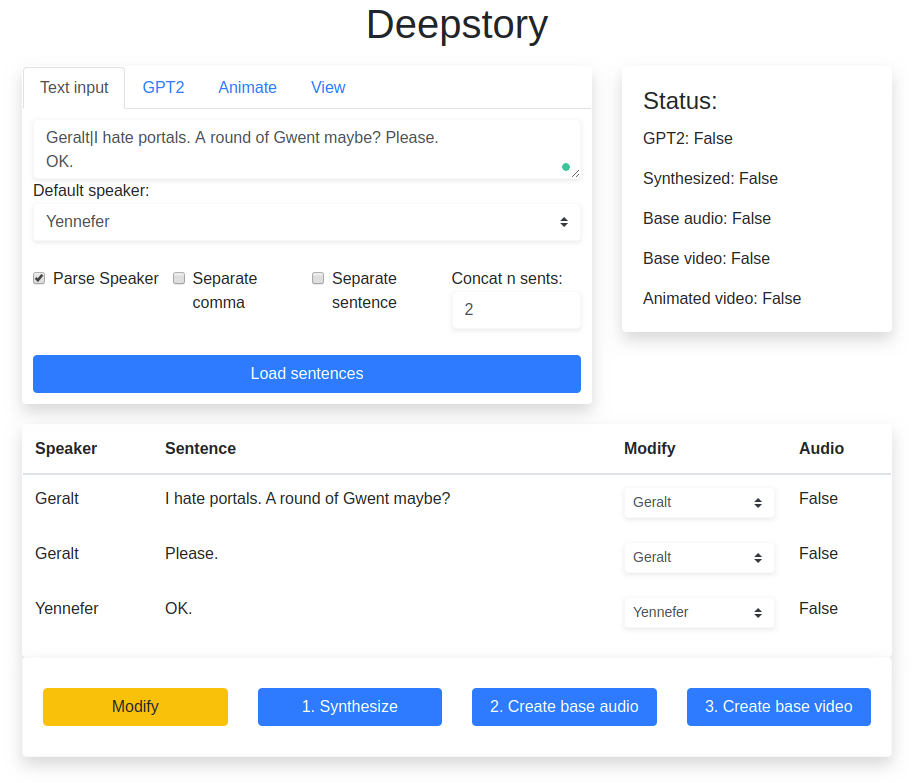

This is the main interface. The tabs at the top left switch between the different interfaces. The status panel on the right shows the current state of the deepstory object. The bottom part lists the loaded sentences, and you can change the speaker of each one to any speaker in the model list.

The Text input interface has a textarea that automatically adjusts its height as you type (done with jQuery). To assign a speaker to a line, put the speaker name before a ‘:’ or ‘|’ separator. Notice that the second line has no separator, so it is assigned the default speaker. I set concat n sents to 2, so although the first line contains 3 sentences, the first two are merged into one and the third becomes a sentence of its own. If separate comma is checked, commas also count as sentence boundaries, which produces more ‘sentences’. The separate sentence function doesn’t work yet, as I have no need for it right now.
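The parsing rules described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the project's actual code: the function name `parse_script`, its parameters, and the sentence-splitting regex are all hypothetical.

```python
import re


def parse_script(text, default_speaker, n_concat=2, separate_comma=False):
    """Turn raw textarea input into a list of {'speaker', 'text'} dicts."""
    # Split on whitespace that follows sentence-ending punctuation;
    # optionally treat commas as sentence boundaries too.
    enders = ".!?," if separate_comma else ".!?"
    splitter = re.compile(rf"(?<=[{re.escape(enders)}])\s+")
    sentences = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # The first ':' or '|' in the line separates the speaker from the text.
        match = re.match(r"^([^:|]+)[:|](.*)$", line)
        if match:
            speaker, content = match.group(1).strip(), match.group(2).strip()
        else:
            # No separator: fall back to the default speaker.
            speaker, content = default_speaker, line
        parts = [p for p in splitter.split(content) if p]
        # Merge every n_concat consecutive sentences into one entry.
        for i in range(0, len(parts), n_concat):
            merged = " ".join(parts[i:i + n_concat])
            sentences.append({"speaker": speaker, "text": merged})
    return sentences
```

With `n_concat=2`, a line holding three sentences yields two entries (the first two merged, the third alone), which matches the behaviour shown in the screenshot.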

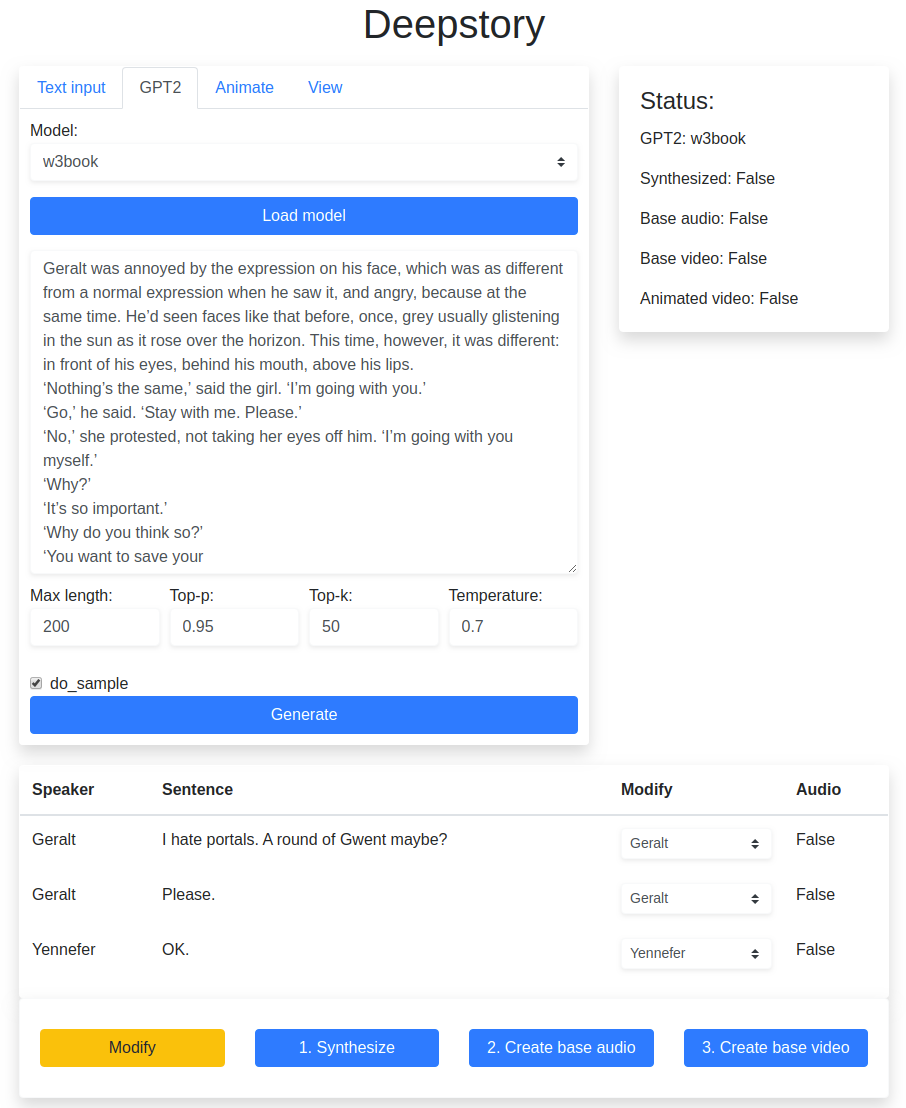

This is the GPT-2 interface, which also has a textarea. The screenshot shows the result after clicking generate. You can leave the textarea empty if the content you want to generate has no prefix. A GPT-2 model must be loaded before generation, and you can pick the model you want in the select box. Below the textarea are the parameters, which are taken from the model’s generate method in transformers: max_length, top-p, top-k, temperature, and do_sample. Their usage is described in the GPT-2 documentation.
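As a rough sketch of how these form values could reach the generate method: the helper names, the form field names, and the fallback defaults below are assumptions for illustration, not the project's code. `model` and `tokenizer` are assumed to be an already loaded GPT-2 model and its tokenizer from transformers.

```python
def parse_generate_params(form):
    """Convert string form values into typed keyword arguments for generate()."""
    return {
        "max_length": int(form.get("max_length", 50)),
        "top_p": float(form.get("top_p", 1.0)),
        "top_k": int(form.get("top_k", 50)),
        "temperature": float(form.get("temperature", 1.0)),
        # Checkboxes are only submitted when ticked, so presence means True.
        "do_sample": "do_sample" in form,
    }


def generate_text(model, tokenizer, prefix, params):
    """Generate a continuation of `prefix` with a loaded GPT-2 model."""
    # An empty prefix is replaced by the beginning-of-sequence token so that
    # generate() still has something to start from.
    input_ids = tokenizer.encode(prefix or tokenizer.bos_token, return_tensors="pt")
    output = model.generate(input_ids, **params)
    return tokenizer.decode(output[0], skip_special_tokens=True)
```

Form values always arrive as strings, so converting them in one place keeps the call to generate free of type errors.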

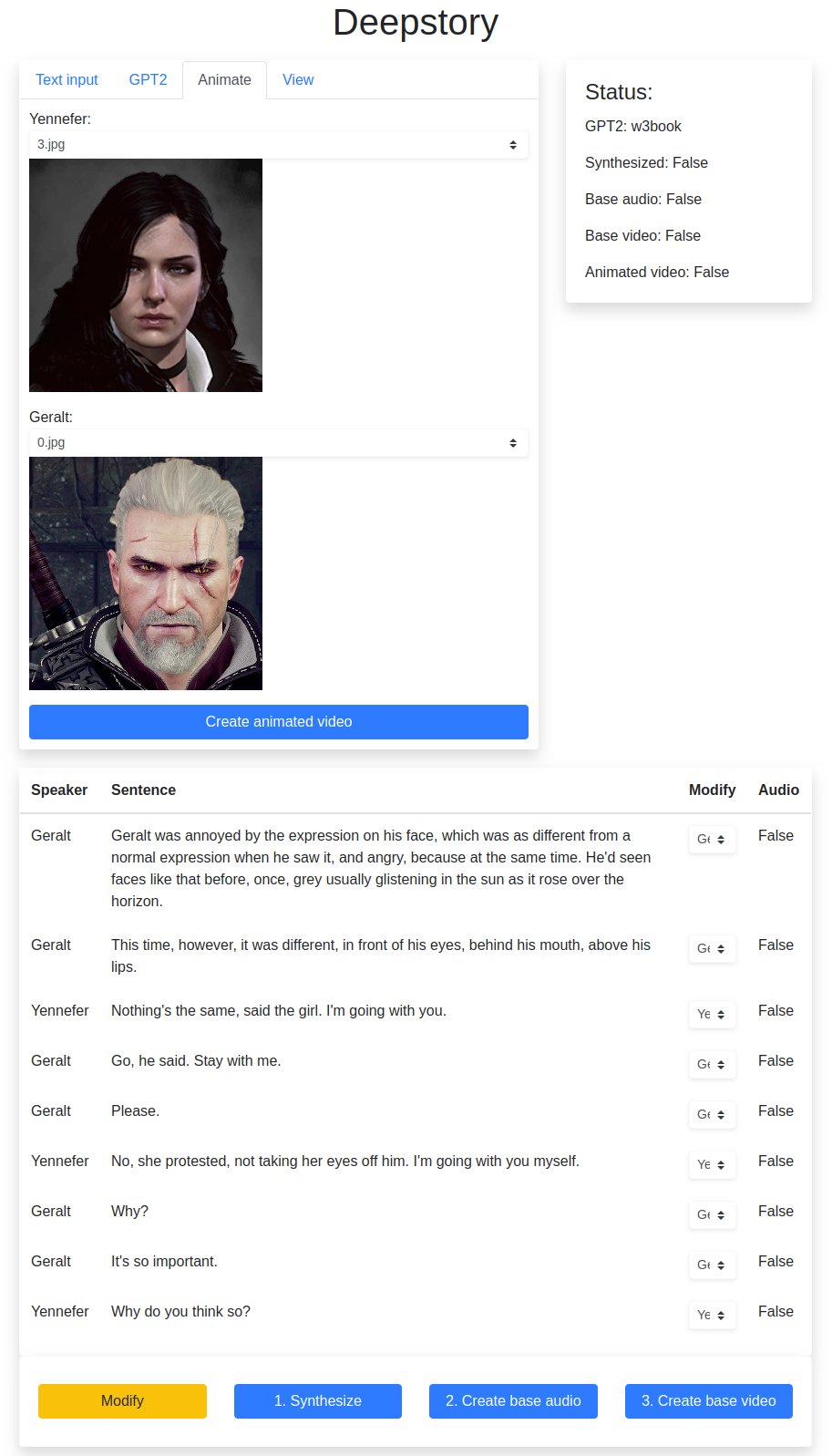

This is the animate interface. You can choose an image to represent the speaker, and that image will then be animated. At this point the sentences haven’t been synthesized yet.

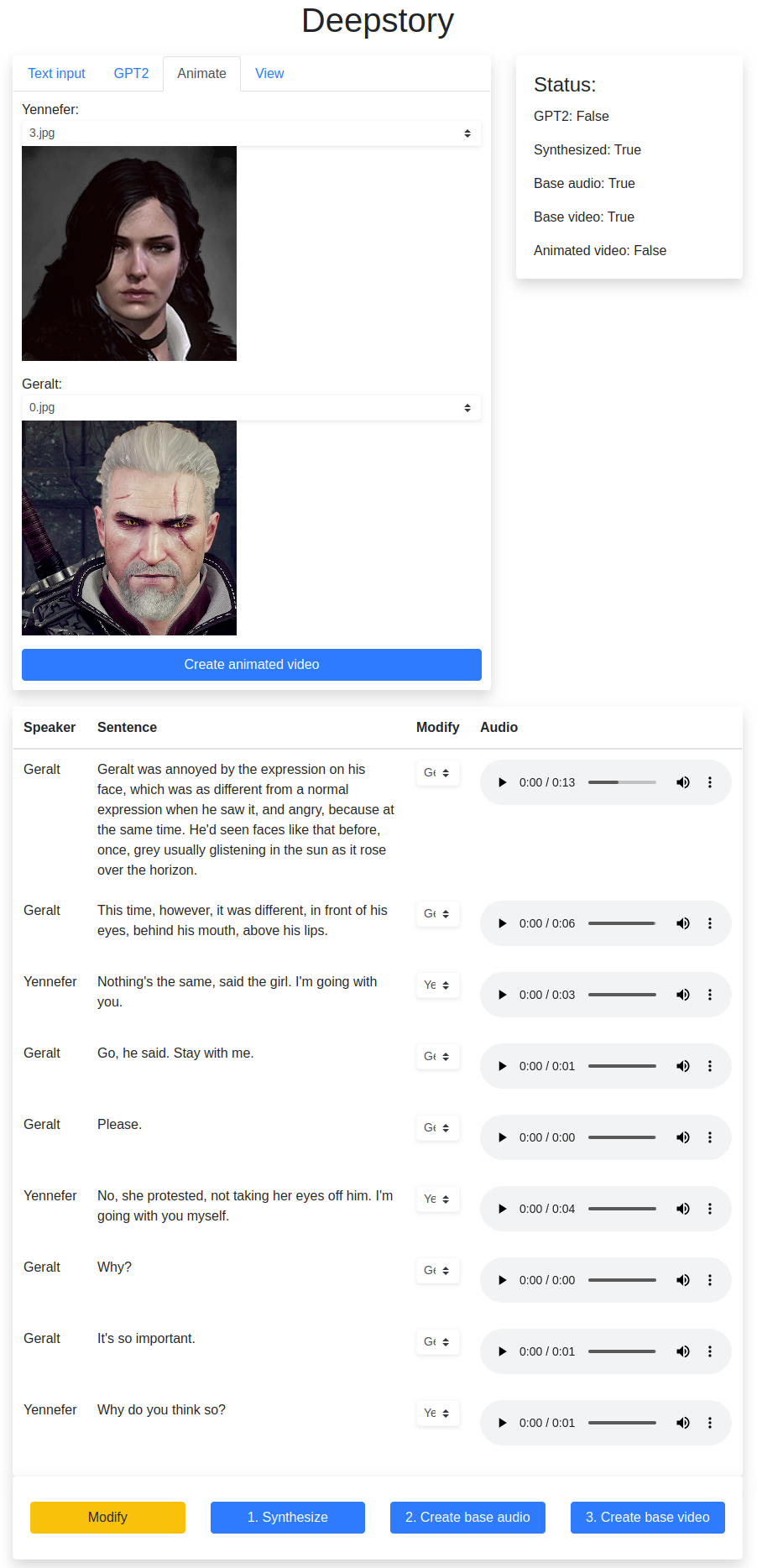

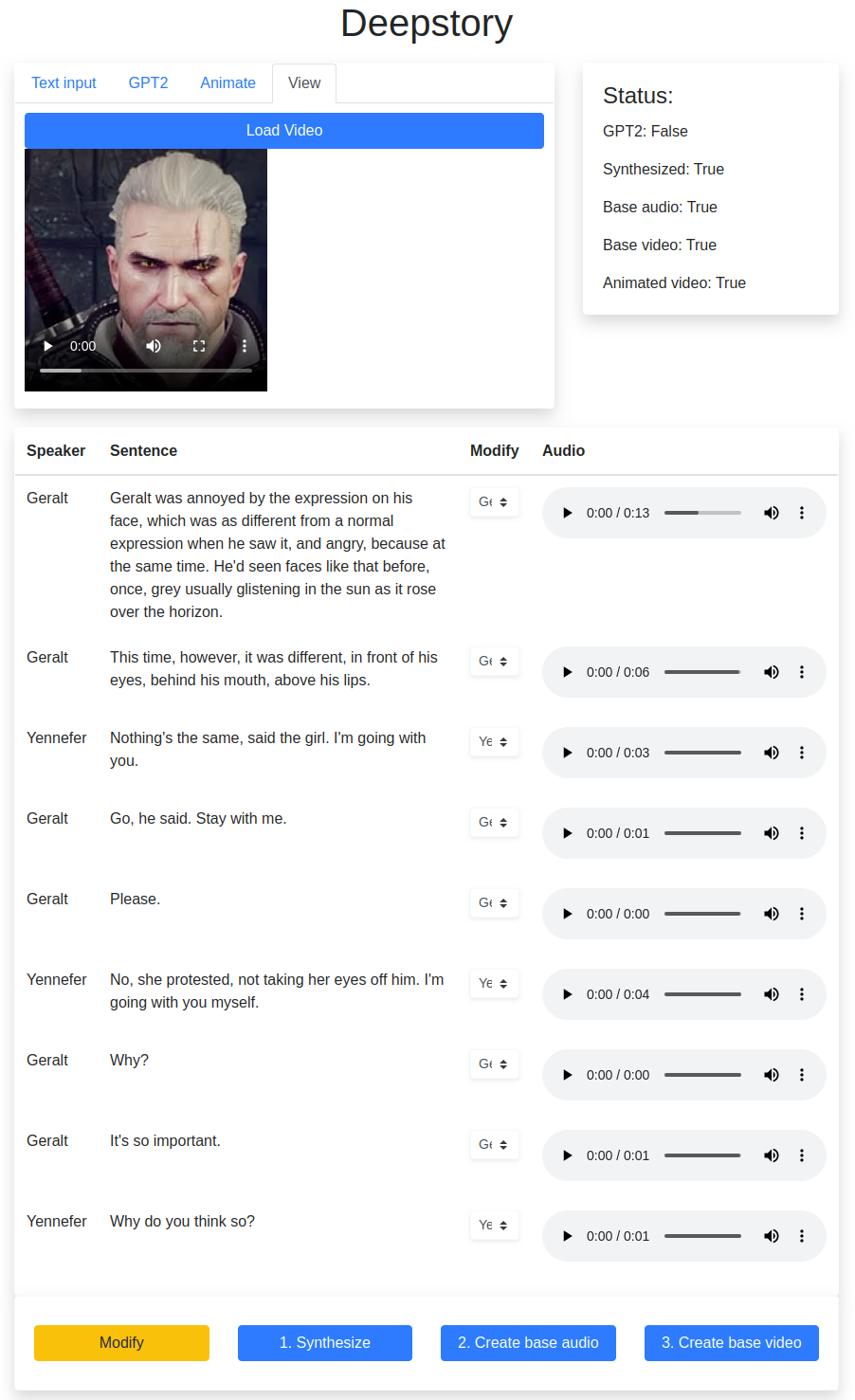

Here is the interface after synthesizing all the sentences. The audio can be instantly previewed. You can also modify the speaker again and re-synthesize the sentences. The image shows that I’ve also created the base audio and video.

Once the animated video has been generated, an alert appears and the status shows that it is ready. In the view interface, clicking load video appends a video element below the button, where you can watch the video or download it right away.

Flask

The whole project is written in Flask, using Jinja2 to render Python data into the HTML templates. I also put the JS file in the templates folder, since it contains URLs that point to some of the functions.

There is nothing very special about the Flask code, but there are a few things I would like to mention and explain.

To simplify the code for sending a response, I’ve written a function, send_message.

```python
def send_message(message, status=200):
    response = make_response(message, status)
    response.mimetype = "text/plain"
    return response
```

The response is shown in the web interface as an alert through jQuery ajax.

To read data sent with the GET method, the following code is used:

```python
request.args.get('model')
```

To read form data sent with the POST method, the following code is used:

```python
request.form.get('text')
```

To read JSON data, the following code is used:

```python
request.json
```

The other things worth mentioning are video loading and audio streaming.

This is the function handling audio streaming:

```python
@app.route("/wav/<int:sentence_id>")
def stream(sentence_id):
    response = make_response(deepstory.stream(sentence_id), 200)
    response.mimetype = "audio/x-wav"
    return response
```

It points to a method in the deepstory class:

```python
def stream(self, sentence_id=0, combined=False):
    wav = self.wav if combined else self.sentence_dicts[sentence_id]['wav']
    with BytesIO() as f:
        scipy.io.wavfile.write(f, hp.sr, wav)
        # Read the bytes out before the with block closes the buffer.
        return f.getvalue()
```

So instead of writing the file to disk, I write it in memory using BytesIO(). The method returns the binary data using .getvalue(), and the route creates a response from that data with the mimetype “audio/x-wav”.
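The same in-memory pattern can be tried without the project or scipy. The sketch below swaps in Python's standard-library `wave` module in place of `scipy.io.wavfile`. The function name `wav_bytes`, the 22050 Hz sample rate, and the test tone are made up for illustration.

```python
import math
import struct
import wave
from io import BytesIO


def wav_bytes(samples, sample_rate):
    """Encode float samples in [-1, 1] as 16-bit mono WAV data held in memory."""
    # Clamp each sample and scale it to the signed 16-bit range.
    ints = [int(max(-1.0, min(1.0, s)) * 32767) for s in samples]
    pcm = struct.pack(f"<{len(ints)}h", *ints)
    with BytesIO() as buffer:
        with wave.open(buffer, "wb") as wav_file:
            wav_file.setnchannels(1)
            wav_file.setsampwidth(2)  # 2 bytes = 16-bit samples
            wav_file.setframerate(sample_rate)
            wav_file.writeframes(pcm)
        # Read the bytes out before the buffer is closed by the with block.
        return buffer.getvalue()


# One tenth of a second of a 440 Hz tone at a hypothetical 22050 Hz rate.
tone = [math.sin(2 * math.pi * 440 * t / 22050) for t in range(2205)]
data = wav_bytes(tone, 22050)
```

The returned bytes form a complete WAV file, so they can be passed straight to make_response the way the route above does.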

For the video, it wouldn’t be very wise to keep the whole thing in memory, so the video is saved to disk. To transfer it, the following Flask route is written:

```python
@app.route('/video')
def video():
    return send_from_directory('export', 'animated.mp4')
```

Images are served the same way as the video:

```python
@app.route('/image/<path:filename>')
def image_viewer(filename):
    return send_from_directory('data/images/', filename)
```

And the corresponding jQuery code to load the image:

```javascript
$("#animate").find("select").each(function(){
    let img = $('<img />', {src: "image/" + $(this).val()});
    img.insertAfter($(this));
}).on('change', function(){
    $(this).parent().find("img").attr('src', "image/" + $(this).val());
});

$("#animate").submit(function (e) {
    e.preventDefault();
    $.ajax({
        type: "GET",
        url: this.action,
        data: $(this).serialize(),
        success: function (message) {
            alert(message);
            refresh();
        },
        error: function (response) {
            alert(response.responseText);
        }
    });
});
```

And the HTML template in index.html:

```html
<select class="border rounded border-light shadow-sm custom-select custom-select-sm"
        name="{{ speaker }}">
    {% for choice_speaker, images in image_dict.items() %}
        <optgroup label="{{ choice_speaker }}">
            {% for image in images %}
                <option value="{{ choice_speaker }}/{{ image }}"
                        {% if choice_speaker == speaker and loop.index0 == 0 %}
                        selected{% endif %}>{{ image }}</option>
            {% endfor %}
        </optgroup>
    {% endfor %}
</select>
```
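The template loops over an image_dict that maps each speaker to a list of image file names. How that dictionary is built isn't shown here. One plausible way is sketched below, assuming one sub-folder per speaker under data/images/ (an inference from the option values and the image route, not something the source confirms). The function name `build_image_dict` and the accepted suffixes are hypothetical.

```python
from pathlib import Path

# File extensions treated as images (an assumption for this sketch).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def build_image_dict(root):
    """Map each speaker folder under `root` to its sorted image file names."""
    image_dict = {}
    for speaker_dir in sorted(Path(root).iterdir()):
        if not speaker_dir.is_dir():
            continue
        images = sorted(
            p.name for p in speaker_dir.iterdir()
            if p.suffix.lower() in IMAGE_SUFFIXES
        )
        # Skip speakers without images so no empty optgroup is rendered.
        if images:
            image_dict[speaker_dir.name] = images
    return image_dict
```

Passing the result to render_template as image_dict would give the nested loops above one optgroup per speaker and one option per image.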