TensorBoard is an important tool for monitoring the current training progress. Even though the DCTTS implementation I opted for is written in PyTorch, it also supports writing logs in TensorBoard format using `SummaryWriter` from tensorboardX.
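A minimal sketch of that kind of logging, assuming tensorboardX is installed. The tag names, log directory and loss values are hypothetical and not taken from the DCTTS implementation. The helper only relies on `add_scalar(tag, scalar_value, global_step)`, so it works with tensorboardX's `SummaryWriter`.

```python
from typing import Dict

try:
    from tensorboardX import SummaryWriter  # the writer mentioned above
except ImportError:  # tensorboardX missing; log_losses still works with any writer
    SummaryWriter = None


def log_losses(writer, step: int, losses: Dict[str, float], prefix: str) -> None:
    """Write each loss as a scalar tagged '<prefix>/<name>' at `step`."""
    for name, value in losses.items():
        writer.add_scalar(f"{prefix}/{name}", float(value), step)


if __name__ == "__main__" and SummaryWriter is not None:
    # Hypothetical log directory and loss values, for illustration only.
    writer = SummaryWriter(log_dir="runs/text2mel_demo")
    for step in range(3):
        log_losses(
            writer,
            step,
            {"loss": 1.0 / (step + 1), "attention_loss": 0.5 / (step + 1)},
            prefix="valid",
        )
    writer.close()
```

Grouping tags under a `valid/` prefix keeps the validation curves together in TensorBoard's scalar view.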

I’ve tried training on 10 Colab notebooks simultaneously across 5 different accounts (at most 2 notebooks per account), and it cut down the time required for training.
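One way to keep 10 parallel notebooks organised is to give each one a single configuration and its own log directory. This is a hypothetical sketch: the learning rates and batch sizes below are placeholders, not the values used in these runs.

```python
from itertools import product
from typing import Dict, List

# Placeholder sweep values: 2 learning rates x 5 batch sizes = 10 notebooks.
LEARNING_RATES = [1e-3, 5e-4]
BATCH_SIZES = [16, 32, 64, 96, 128]


def make_runs(learning_rates, batch_sizes, root: str = "runs") -> List[Dict]:
    """Enumerate every (lr, batch size) pair with its own TensorBoard log dir."""
    runs = []
    for lr, bs in product(learning_rates, batch_sizes):
        runs.append({"lr": lr, "batch_size": bs, "log_dir": f"{root}/lr{lr:g}_bs{bs}"})
    return runs


def run_for_notebook(index: int, runs: List[Dict]) -> Dict:
    """Pick the configuration for notebook number `index` (0-based)."""
    return runs[index % len(runs)]


if __name__ == "__main__":
    for i, run in enumerate(make_runs(LEARNING_RATES, BATCH_SIZES)):
        print(i, run["log_dir"])
```

Once the logs from the separate notebooks are gathered under one parent folder, `tensorboard --logdir runs` shows each subdirectory as its own run, so the curves can be overlaid for comparison.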

One thing I’ve noticed is that changing the batch size seems to shift the whole loss curve up or down, while the learning rate needs to be tuned over a few runs so that the loss converges to a minimum more efficiently. The most frequent pattern is that the validation loss decreases and then, over time, increases again, so there is definitely some over-fitting. Still, I can’t be sure whether that’s bad, because in some cases the synthesized speech actually sounds better. Maybe a bit of over-fitting is acceptable.
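The "decreases, then increases again" pattern can be checked numerically by locating the minimum of the validation curve. This is a minimal sketch assuming the losses are available as `(step, loss)` pairs; the curve below is made up. It only flags the rise, and whether a later checkpoint still sounds better is, as noted above, a listening judgment.

```python
from typing import List, Tuple


def best_step_and_overfit(
    valid_losses: List[Tuple[int, float]], tolerance: float = 0.0
) -> Tuple[int, bool]:
    """Return the step with the lowest validation loss and whether the latest
    loss has risen above that minimum by more than `tolerance`."""
    if not valid_losses:
        raise ValueError("need at least one (step, loss) pair")
    best_step, best_loss = min(valid_losses, key=lambda pair: pair[1])
    last_loss = valid_losses[-1][1]
    return best_step, last_loss - best_loss > tolerance


if __name__ == "__main__":
    # Hypothetical validation curve: falls until step 3000, then climbs back up.
    curve = [(1000, 0.9), (2000, 0.6), (3000, 0.5), (4000, 0.55), (5000, 0.62)]
    print(best_step_and_overfit(curve, tolerance=0.05))
```

A small `tolerance` avoids flagging ordinary noise in the curve as over-fitting.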

Here are some screenshots:

comparing different learning rates

mainly check valid loss and valid attention loss

some slight over-fitting for SSRN

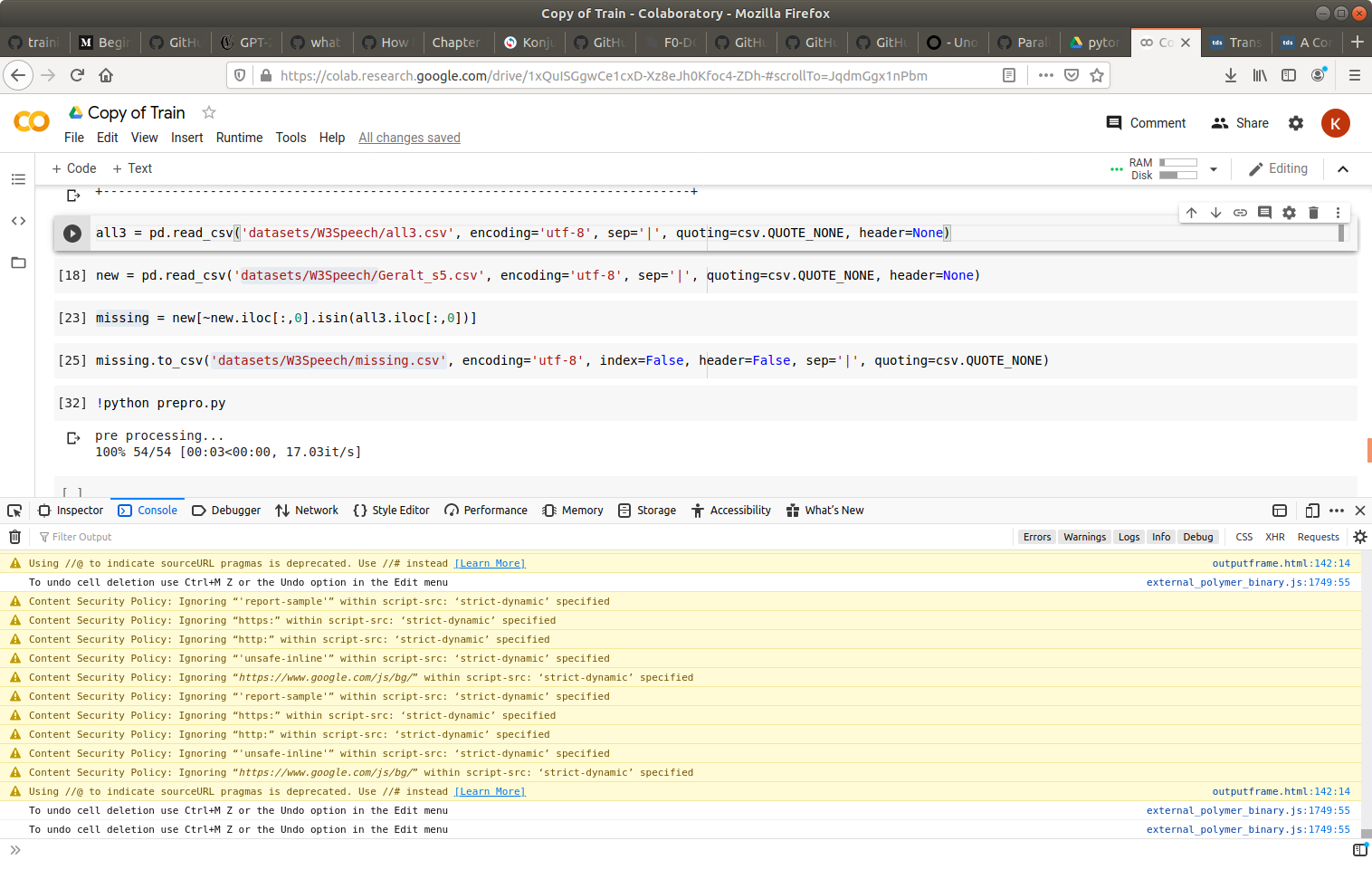

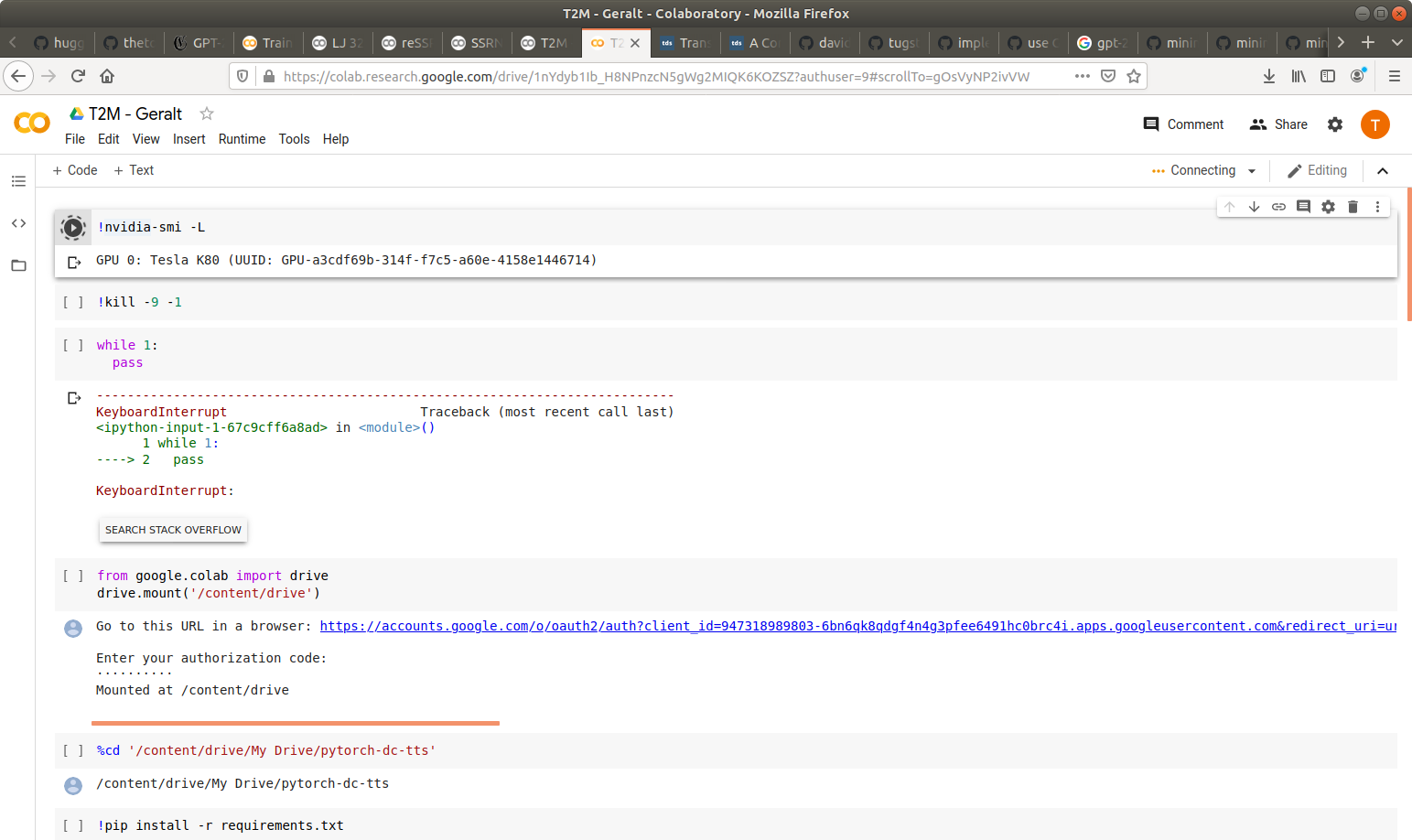

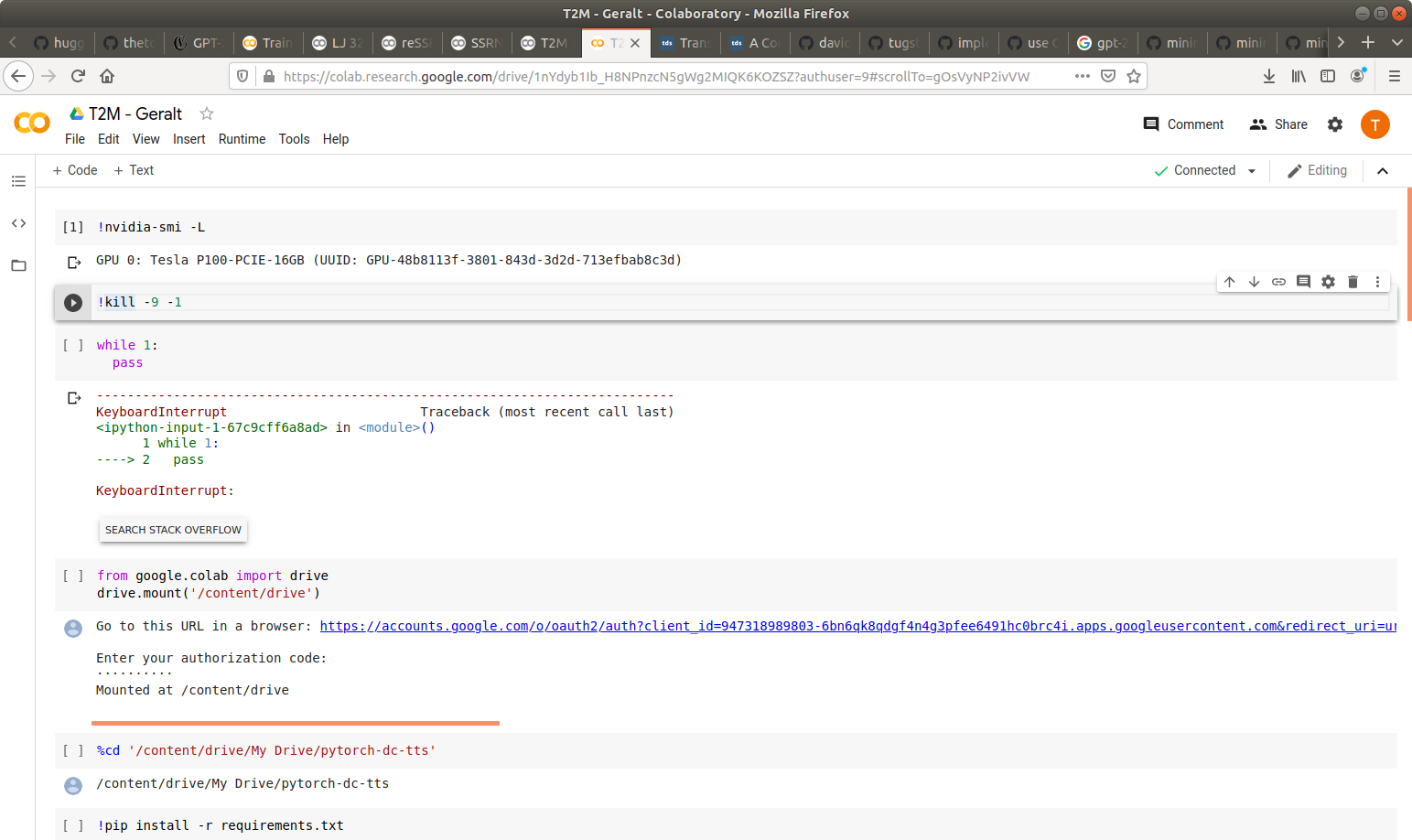

running on Colab, with a script that inserts a new cell periodically so that I don’t get disconnected

This time I got a K80 GPU, which is not the fastest.

So I kept disconnecting and reconnecting until I got a P100 GPU.
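Checking which GPU a runtime was assigned can be scripted instead of read off by hand. This sketch assumes `nvidia-smi` is on the PATH (it is on Colab GPU runtimes); treating "P100" as the only acceptable name is just the preference stated above.

```python
import shutil
import subprocess

PREFERRED = ("P100",)  # substrings of GPU names worth keeping


def gpu_name() -> str:
    """Return the first GPU's name as reported by nvidia-smi, or '' if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return ""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    lines = result.stdout.strip().splitlines()
    return lines[0].strip() if lines else ""


def is_preferred(name: str, preferred=PREFERRED) -> bool:
    """True if the GPU name contains any of the preferred model strings."""
    return any(p in name for p in preferred)


if __name__ == "__main__":
    name = gpu_name()
    print(name or "no GPU found")
    if not is_preferred(name):
        print("Not a preferred GPU; consider reconnecting to get a different one.")
```

Matching on a substring keeps the check tolerant of variants such as "Tesla P100-PCIE-16GB".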